TO_DO:基础知识

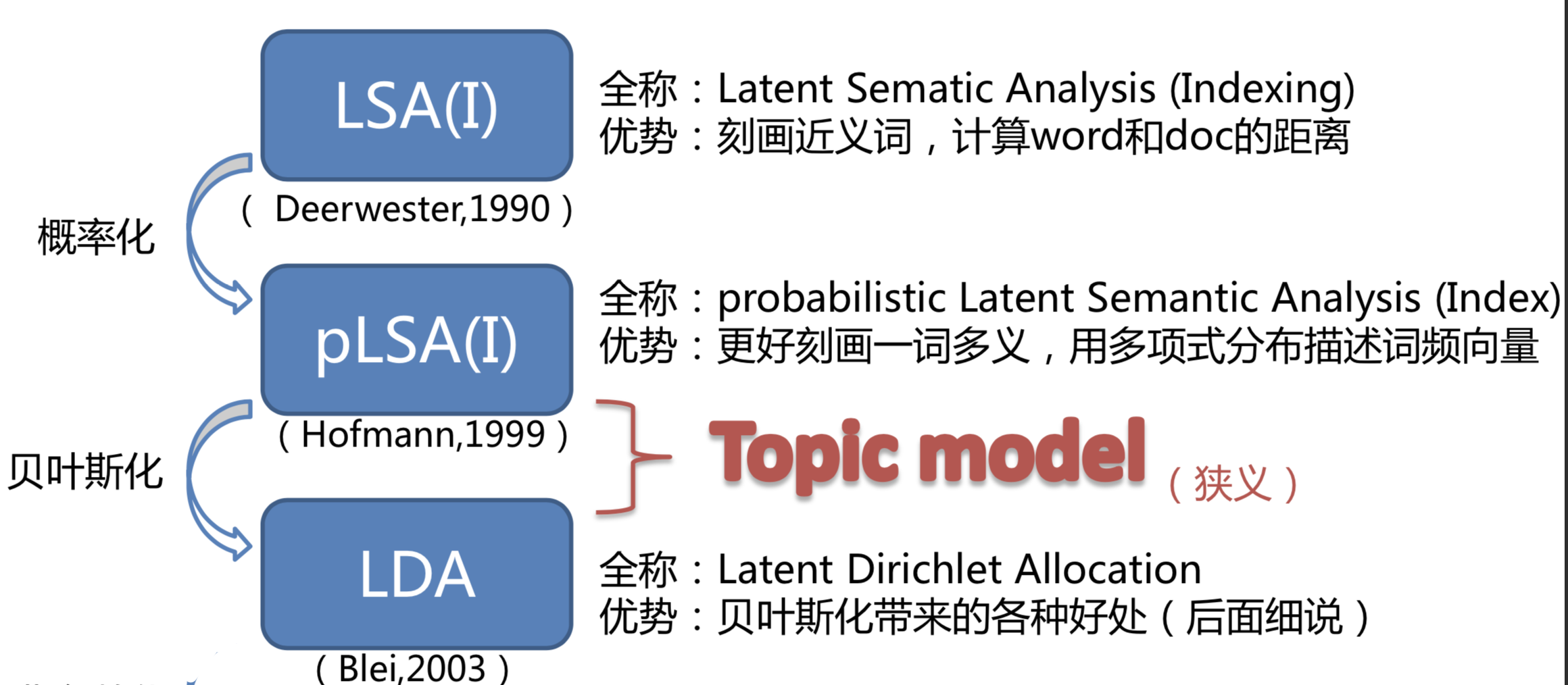

plsa—涉及文档和主题和词,主题为隐变量,概率图模型,通过建模KL散度,用EM算法求解。

使用例子:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37import jieba

# 创建停用词列表

def stopwordslist():

stopwords = [line.strip() for line in open('./data/stopwords.txt',encoding='UTF-8').readlines()]

return stopwords

# 对句子进行中文分词

def seg_depart(sentence):

# 对文档中的每一行进行中文分词

# print("正在分词")

sentence_depart = jieba.cut(sentence.strip())

# 创建一个停用词列表

stopwords = stopwordslist()

# 输出结果为outstr

outstr = ''

# 去停用词

for word in sentence_depart:

if word not in stopwords:

if word != '\t':

outstr += word

outstr += " "

return outstr

# 给出文档路径

filename = "./data/cnews.train_preprocess.txt"

outfilename = "./data/cnews.train_jieba.txt"

inputs = open(filename, 'r', encoding='UTF-8')

outputs = open(outfilename, 'w', encoding='UTF-8')

# 将输出结果写入ou.txt中

for line in inputs:

line_seg = seg_depart(line)

outputs.write(line_seg + '\n')

outputs.close()

inputs.close()

print("删除停用词和分词成功!!!")

1 | from gensim import corpora, models, similarities |